August 2021 introduced several changes to our test of antimalware applications. Our methodology remained unchanged, so we continued to use only real threats found on the Internet and in email attachments on a daily basis. Unlike in previous editions of the test, this time we used about 80% more malware samples than usual. We also improved the initial verification that a sample must pass before it qualifies for analysis in the test. In this edition, as in the following ones, we use YARA rules to better select harmful software while rejecting unclear or damaged samples. All of this happens without prior verification in a sandbox, which saves time and allows us to test more samples. We will describe the details shortly, when we complete the methodology documentation.

During the August edition of the Advanced In The Wild Malware Test, we used over 2200 malware samples and tested the protection effectiveness of the following software:

- Avast Free Antivirus

- Avira Antivirus Pro

- Comodo Advanced Endpoint Protection

- Comodo Internet Security

- Emsisoft Business Security

- Microsoft Defender

- Panda Dome

- SecureAPlus Pro

- Webroot Antivirus

Levels of blocking malicious software samples

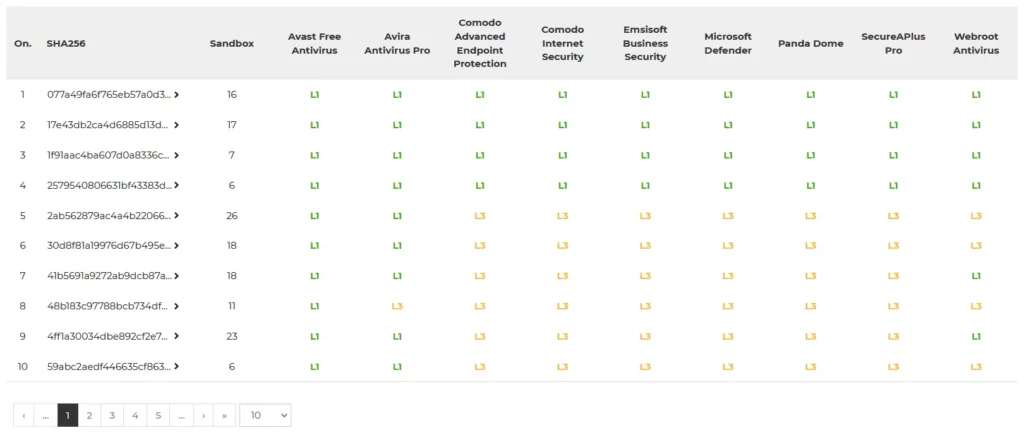

We share the checksums of the malicious software with researchers and security enthusiasts, grouped by the protection technology that detected and stopped each threat. According to independent experts, this innovative approach to comparing security products helps readers better understand the differences between products available on the market.
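To illustrate what a shared checksum is, here is a minimal sketch assuming the published checksums are SHA-256 hex digests (the hash algorithm is an assumption, not something stated above). A researcher could hash a local file without ever executing it; the function names `sample_checksum` and `file_checksum` are hypothetical.

```python
import hashlib
import io
from pathlib import Path
from typing import BinaryIO


def sample_checksum(stream: BinaryIO, chunk_size: int = 65536) -> str:
    """Return the SHA-256 hex digest of a binary stream, read in chunks.

    SHA-256 is an assumed choice of algorithm for this sketch.
    """
    digest = hashlib.sha256()
    # iter() with a b"" sentinel keeps reading until the end of the stream.
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def file_checksum(path: Path) -> str:
    """Open a file in binary mode and hash it; the file is never executed."""
    with path.open("rb") as handle:
        return sample_checksum(handle)


if __name__ == "__main__":
    # Harmless in-memory bytes stand in for a real malware sample here.
    print(sample_checksum(io.BytesIO(b"abc")))
    # prints ba7816bf8f01cfea414140de5dae2223b00361a396177a9cb410ff61f20015ad
```

Reading in fixed-size chunks keeps memory use constant even for large samples, and the resulting digest can be compared against a published list.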

Each malware sample tested against a protection solution is assigned to one of the following levels:

- Level 1 (L1): The browser level, i.e., a virus has been stopped before or after it has been downloaded onto a hard drive.

- Level 2 (L2): The system level, i.e., a virus has been downloaded, but it has not been allowed to run.

- Level 3 (L3): The analysis level, i.e., a virus has been run and blocked by a tested product.

- Failure (F): The failure, i.e., a virus has not been blocked and it has infected a system.
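The level definitions above amount to recording the earliest stage at which a product stopped a sample. A minimal sketch, assuming each run yields three hypothetical yes/no observations (blocked in the browser, blocked on the system before execution, blocked after execution); the names `Level`, `SampleRun` and `classify` are illustrative and are not AVLab's actual tooling.

```python
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    L1 = "browser level"
    L2 = "system level"
    L3 = "analysis level"
    F = "failure"


@dataclass
class SampleRun:
    # Hypothetical observations from one sample run against one product.
    blocked_in_browser: bool
    blocked_before_run: bool
    blocked_after_run: bool


def classify(run: SampleRun) -> Level:
    """Return the earliest stage at which the product stopped the sample."""
    if run.blocked_in_browser:
        return Level.L1
    if run.blocked_before_run:
        return Level.L2
    if run.blocked_after_run:
        return Level.L3
    # Not blocked at any stage: the sample infected the system.
    return Level.F


if __name__ == "__main__":
    print(classify(SampleRun(False, False, True)).name)  # L3
```

Checking the stages in order means a sample is credited to the first level that stopped it, matching the browser, system, analysis ordering of the list.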

The products and Windows 10 settings: daily test cycle

Tests are carried out in Windows 10 Pro x64. User Account Control (UAC) is disabled because the purpose of the tests is to check how effectively a product protects against malware, not how the test system reacts to Windows prompts. Other Windows settings remain unchanged.

The Windows 10 system has the following software installed: an office suite, a document viewer, an email client, and other tools and files that give the impression of a normal working environment.

Automatic Windows 10 updates are disabled throughout each month of testing. Because updates can cause malfunctions, Windows 10 is updated every few weeks under close supervision.

Security products are updated once a day. Before the tests are run, virus databases and protection product files are updated, which means that the latest versions of the protection products are tested every day. All antivirus applications had access to the Internet during the tests.

VirusTotal vs real working environment

We use real Windows 10 working environments with a graphical interface, which is why the results for individual samples may differ from those presented by the VirusTotal service. We point this out because inquisitive users may compare our tests with the scan results on the VirusTotal website. The differences between real products installed on Windows 10 and the scan engines on VirusTotal turn out to be significant.

Malicious software

In July, we used 1311 malware samples for the test, including banking trojans, ransomware, backdoors, downloaders, and macro viruses. In contrast to well-known testing institutions, our tests are much more transparent: we publish the full list of harmful software samples.

The latest results of blocking each sample are available in a table at https://avlab.pl/en/recent-results/.

Summary of the September 2021 edition of the test

The correct interpretation of the test is not obvious at first glance. Yes, all applications achieved almost 100% protection against so-called in-the-wild samples, which can be found on the Internet on a daily basis. These samples are often similar to each other and are detected by generic signatures. During the test, however, there are also threats that a given vendor does not yet recognize.

This is best shown by Level 3, because at this stage the virus is already running and we expect the tested application to react correctly. In other words, if a sample was not blocked while being scanned in the browser or while being moved from one location to another on the system disk, and was blocked only after it was run, we can conclude that the file was not known to the vendor. For this reason, the malware could not be detected without a deeper scan, so signature protection alone was insufficient. Not every antimalware product can analyze a file before it is saved to disk, so the file sometimes has to be run locally or in the cloud for automated analysis.

Turning to the substance of this summary, Level 3 deserves particular attention because it shows real protection against 0-day threats. But be careful! Some antimalware applications have no browser protection or even no signature protection, so if the test is not interpreted correctly, they may appear to score lower.

In our tests, we award neither positive nor negative points depending on how early a threat was blocked. An antimalware solution simply has to block a threat in whatever way its vendor intended. The final result depends only on whether the product managed to do this correctly using any of its protection modules.
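Under this rule, a blocked sample counts the same whichever level stopped it. A minimal sketch, assuming per-sample results are recorded as the labels "L1", "L2", "L3" or "F" (the function name `summarize` and the input format are hypothetical): the protection rate is unweighted, and the per-level breakdown only shows which protection modules acted.

```python
from collections import Counter
from typing import Iterable


def summarize(levels: Iterable[str]) -> dict:
    """Count samples per level and compute an unweighted protection rate."""
    counts = Counter(levels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no samples recorded")
    # L1, L2 and L3 all count as blocked: no bonus for an early block,
    # no penalty for a late one.
    blocked = counts["L1"] + counts["L2"] + counts["L3"]
    return {
        "per_level": {level: counts[level] for level in ("L1", "L2", "L3", "F")},
        "protection_rate": 100.0 * blocked / total,
    }


if __name__ == "__main__":
    # Made-up example input, not results from this test.
    print(summarize(["L1", "L3", "L3", "F"]))
    # prints {'per_level': {'L1': 1, 'L2': 0, 'L3': 2, 'F': 1}, 'protection_rate': 75.0}
```

Keeping the breakdown separate from the score lets readers see, for example, that a product without browser protection blocks mostly at L2 or L3 without that lowering its protection rate.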