This is the last 2023 edition of our long-term series “Advanced In The Wild Malware Test”. In this multi-month analysis, conducted six times a year in a real environment, we evaluate how effectively security software protects Windows against realistic threats originating from various sources on the Internet.

November 2023 was marked by PUAs/PUPs (potentially unwanted applications/programs), which by their nature are not malware but can still cause harm to users with limited technical knowledge. The scale of potentially unwanted software is evident from the fact that almost 25% of the in-the-wild web addresses led to installers for browser extensions, toolbars, or other potentially intrusive applications.

In the end, 245 samples of genuine malicious software remained. The most interesting among them include the following families:

- Doina

- Jaik

- Boigy

- Fugrapha

- VBA.Trojan.d

- Mint.Zard

- MSIL.PasswordStealerA

- CryptZ.Marte

- Strictor

- Shellcode.Loader.Marte

To classify threats, we use feedback from the antivirus engine of our technology partner, MKS_VIR sp. z o.o. Interestingly, as many as 74 of the 245 samples were identified as 0-day (no signatures had been assigned to them yet).
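To show how the 0-day share follows from these figures, here is a minimal Python sketch. It assumes that the engine verdict for each sample is either a signature name or `None` when no signature exists yet; this data model and the placeholder signature name are illustrative assumptions, not the partner engine's actual interface.

```python
from typing import List, Optional

def is_zero_day(signature: Optional[str]) -> bool:
    # Assumed rule: a sample counts as 0-day when the engine has no
    # signature assigned to it at test time (represented as None).
    return signature is None

def zero_day_share(signatures: List[Optional[str]]) -> float:
    """Return the fraction of samples without an assigned signature."""
    if not signatures:
        return 0.0
    return sum(is_zero_day(s) for s in signatures) / len(signatures)

# November 2023 counts: 74 of 245 samples had no signature.
# "Trojan.Generic" is a placeholder name, not real engine output.
samples = [None] * 74 + ["Trojan.Generic"] * (245 - 74)
print(f"{zero_day_share(samples):.1%}")  # 30.2%
```

In other words, roughly three in ten of the qualifying samples were not yet covered by signatures.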

Our tests comply with the guidelines of the Anti-Malware Testing Standards Organization (AMTSO). Details about the test are available on this website and in our methodology.

How do we evaluate the tested solutions in 3 steps?

In a nutshell, the Advanced In The Wild Malware Test consists of three consecutive stages that together form a whole:

1. Selecting samples for the test and analyzing malware logs

We continuously collect malware in the form of real URLs from the Internet. The samples come from a wide range of sources, including public feeds and honeypots. As a result, the test covers an up-to-date and diverse set of threats.

Samples analyzed in Windows undergo thorough verification against hundreds of rules covering the techniques most commonly used by malware creators, including the abuse of LOLBins (living-off-the-land binaries). We monitor system processes, network connections, the Windows registry, and other changes to the operating system and file system to determine whether a given sample is truly harmful.
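As an illustration of rule-based verification, the following Python sketch matches observed process command lines against a few regular expressions describing well-known LOLBin abuse patterns (`certutil -urlcache`, encoded PowerShell commands, `mshta` loading a remote script). The three rules and the sample command lines are hypothetical examples; the actual rule set used in the test is not described in this report.

```python
import re

# Hypothetical rules for illustration only; they are not the test's rule set.
RULES = {
    "certutil_download": re.compile(r"certutil(\.exe)?\s+.*-urlcache", re.IGNORECASE),
    "powershell_encoded": re.compile(r"powershell(\.exe)?\s+.*-enc(odedcommand)?\b", re.IGNORECASE),
    "mshta_remote": re.compile(r"mshta(\.exe)?\s+https?://", re.IGNORECASE),
}

def match_rules(command_lines):
    """Return the sorted names of rules triggered by any observed command line."""
    hits = set()
    for cmd in command_lines:
        for name, pattern in RULES.items():
            if pattern.search(cmd):
                hits.add(name)
    return sorted(hits)

# Hypothetical process telemetry from one sample's run.
observed = [
    "C:\\Windows\\System32\\certutil.exe -urlcache -split -f http://example.com/a.exe a.exe",
    "notepad.exe C:\\Users\\test\\readme.txt",
    "powershell.exe -NoProfile -enc SQBFAFgA",
]
print(match_rules(observed))  # ['certutil_download', 'powershell_encoded']
```

A real pipeline would also correlate network, registry, and file-system events; command-line matching is only one of those signals.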

In the next step, each malware sample is downloaded from its original URL by a browser, at the same time on all operating systems with the tested security products installed.

What happens next?

2. Simulating a real-world scenario

In the test, we simulate a real-world scenario of a threat entering the system from a URL. The URL may come from a website prepared by a scammer or from a link sent to the victim via a messenger, email, or document. The link is then opened in the system browser (we use Firefox).

The final result distinguishes two levels at which a threat can be blocked:

- PRE-LAUNCH: If a link to a file is quickly identified and blocked in the browser, we assign the result to the so-called PRE-LAUNCH level, where the threat is blocked at an early stage, before it is launched.

- POST-LAUNCH: If the malware is downloaded and allowed to run, but is then successfully blocked, we assign this level. It reflects the real effectiveness of the security product against threats, including 0-day ones.

While the Pre-Launch level indicates malware that was quickly detected and blocked before activating its malicious payload, the Post-Launch level refers to a threat caught by any of the developer's technologies (local or cloud-based) after being executed in the system. It should be emphasized that the best solutions at this level are those that offer diversified protection in the form of multiple security layers.
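The two blocking levels can be expressed as a small decision function. The Python sketch below assumes three boolean observations per sample run (blocked in the browser, executed, blocked after execution); these field names and the extra `NOT_BLOCKED` outcome for a missed sample are hypothetical additions for illustration, not fields from the test's telemetry.

```python
from dataclasses import dataclass
from enum import Enum

class Level(Enum):
    PRE_LAUNCH = "PRE-LAUNCH"
    POST_LAUNCH = "POST-LAUNCH"
    NOT_BLOCKED = "NOT BLOCKED"  # hypothetical label for a missed sample

@dataclass
class SampleRun:
    # Hypothetical observations for one sample on one tested product.
    blocked_in_browser: bool  # URL or download stopped before the file could run
    executed: bool            # the file was started in the system
    blocked_after_run: bool   # the product stopped the threat after execution

def classify(run: SampleRun) -> Level:
    """Assign the blocking level according to the two scenarios above."""
    if run.blocked_in_browser and not run.executed:
        return Level.PRE_LAUNCH
    if run.executed and run.blocked_after_run:
        return Level.POST_LAUNCH
    return Level.NOT_BLOCKED

print(classify(SampleRun(blocked_in_browser=False, executed=True, blocked_after_run=True)).value)
# POST-LAUNCH
```

Checking the browser-level block first mirrors the order in which a threat would actually be stopped.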

3. Assessment of incident recovery time (Remediation Time)

In addition to detecting and blocking 0-day threats, we measure the time required to automatically recover from the incident caused by each malware sample; averaged over the samples, this gives the Average Automatic Remediation Time.

We configure the tested products so that removal of the attack's effects and system repair are performed automatically, without asking the user for a decision.

Ultimately, for each malware sample we measure the time that elapses from its execution, through detection of the malicious activity, to automatic recovery from the incident.
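A minimal Python sketch of this per-sample measurement is shown below. It assumes that three ISO 8601 timestamps are logged for each sample (execution, detection, completed remediation); the function name and the example timestamps are hypothetical.

```python
from datetime import datetime

def remediation_timeline(executed_at: str, detected_at: str, remediated_at: str):
    """Return (seconds to detection, seconds to remediation), both from execution.

    The three ISO 8601 timestamps are an assumed logging format.
    """
    t_exec, t_det, t_rem = (
        datetime.fromisoformat(t) for t in (executed_at, detected_at, remediated_at)
    )
    if not (t_exec <= t_det <= t_rem):
        raise ValueError("timestamps must follow execution -> detection -> remediation")
    return (t_det - t_exec).total_seconds(), (t_rem - t_exec).total_seconds()

# Hypothetical timestamps for a single sample.
print(remediation_timeline(
    "2023-11-14T10:00:00.000",
    "2023-11-14T10:00:01.200",
    "2023-11-14T10:00:05.900",
))  # (1.2, 5.9)
```

The second value, taken from execution to the end of remediation, is the quantity that would be averaged per product.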

Results in November 2023

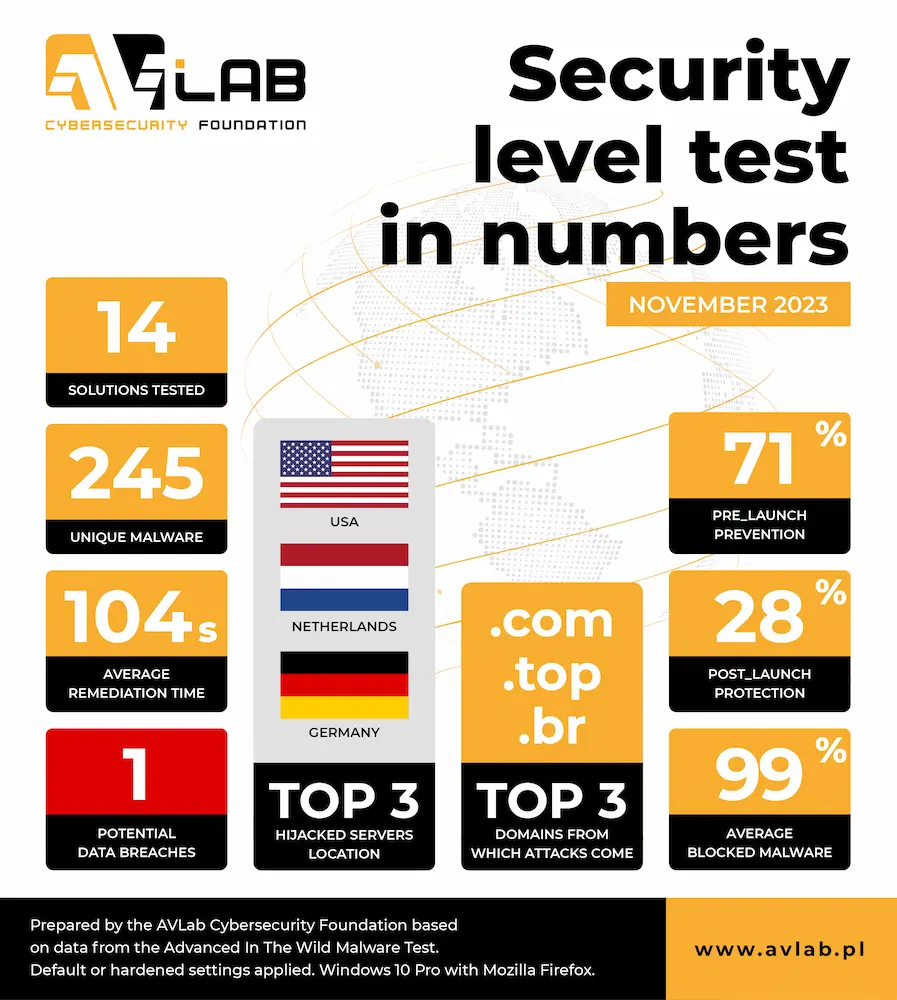

In November 2023, we used 245 unique malware samples from the internet.

Tested solutions for home and small office use:

- Avast Free Antivirus

- Eset Smart Security

- F-Secure Total

- G Data Total Security

- Kaspersky Plus

- Malwarebytes Premium

- Quick Heal Total Security

- Webroot Antivirus

- Xcitium (Comodo) Internet Security

- Hidden name 1 – private test 1

- Hidden name 2 – private test 2

Solutions for business & government institutions:

- Emsisoft Business Security

- ThreatDown Endpoint Protection

- Xcitium ZeroThreat Advanced

Key technical data and results are available on the RECENT RESULTS webpage, along with a threat landscape prepared from the test's telemetry data.

Threat landscape of the test in a nutshell

We have prepared the following overview based on the telemetry data:

- 14 protection solutions took part in the test.

- In total, we used 245 unique URLs containing malware.

- During the analysis, each malware sample performed an average of 63 potentially harmful actions in Windows 10.

- Most malware originated from servers located in the USA, the Netherlands, and Germany.

- The top-level domains most often used to host malware were .com, .top, and .br.

- The average automatic remediation time across all developers was 104 seconds.

- F-Secure achieved the best Average Automatic Remediation Time, at 6 seconds.
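The top-level-domain statistic can be derived from the list of sample URLs. The following Python sketch naively takes the last label of each hostname; the example URLs are placeholders rather than samples from the test, and a production tool would need to handle IP-address hosts and multi-label public suffixes separately.

```python
from collections import Counter
from urllib.parse import urlsplit

def tld_counts(urls):
    """Count the last label of each URL's hostname (a naive TLD)."""
    counts = Counter()
    for url in urls:
        host = urlsplit(url).hostname  # None when the string has no host part
        if host:
            counts["." + host.rsplit(".", 1)[-1]] += 1
    return counts

# Placeholder URLs for illustration, not samples from the test.
urls = [
    "https://files.example.com/setup.exe",
    "http://dl.example.top/a.zip",
    "https://cdn.example.com/b.msi",
    "http://site.example.com.br/c.exe",
]
print(tld_counts(urls).most_common(3))  # [('.com', 2), ('.top', 1), ('.br', 1)]
```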

Step-by-step methodology

We systematically develop our methodology to keep up with new trends in the security and system protection industry. Below are links to the full methodology:

- How do we acquire malware for testing, and how do we classify it?

- How do we collect logs from Windows and protection software?

- How do we automate all of this?

We are a member of the AMTSO group, so you can be assured that our testing tools and processes comply with international guidelines and are respected by developers.

Conclusions from the November 2023 test

From November 1 to 30, 2023, we worked on the latest edition of the “Advanced In The Wild Malware Test”. Over the course of this year, we have acquired a large amount of telemetry data on the operation of security products and malware, which will allow us to prepare a full-year summary. We ask for a moment of patience: the summary of the 2023 Advanced In The Wild Malware Tests will be published after the New Year.

Characteristics of “In The Wild” threats

Overall, the malware that qualified for the test consisted predominantly of Trojans, which pose a serious threat to the security of computers running the Windows operating system. They can steal personal information, take control of a user's computer, send spam, download ransomware or keyloggers, and perform other unwanted activities.

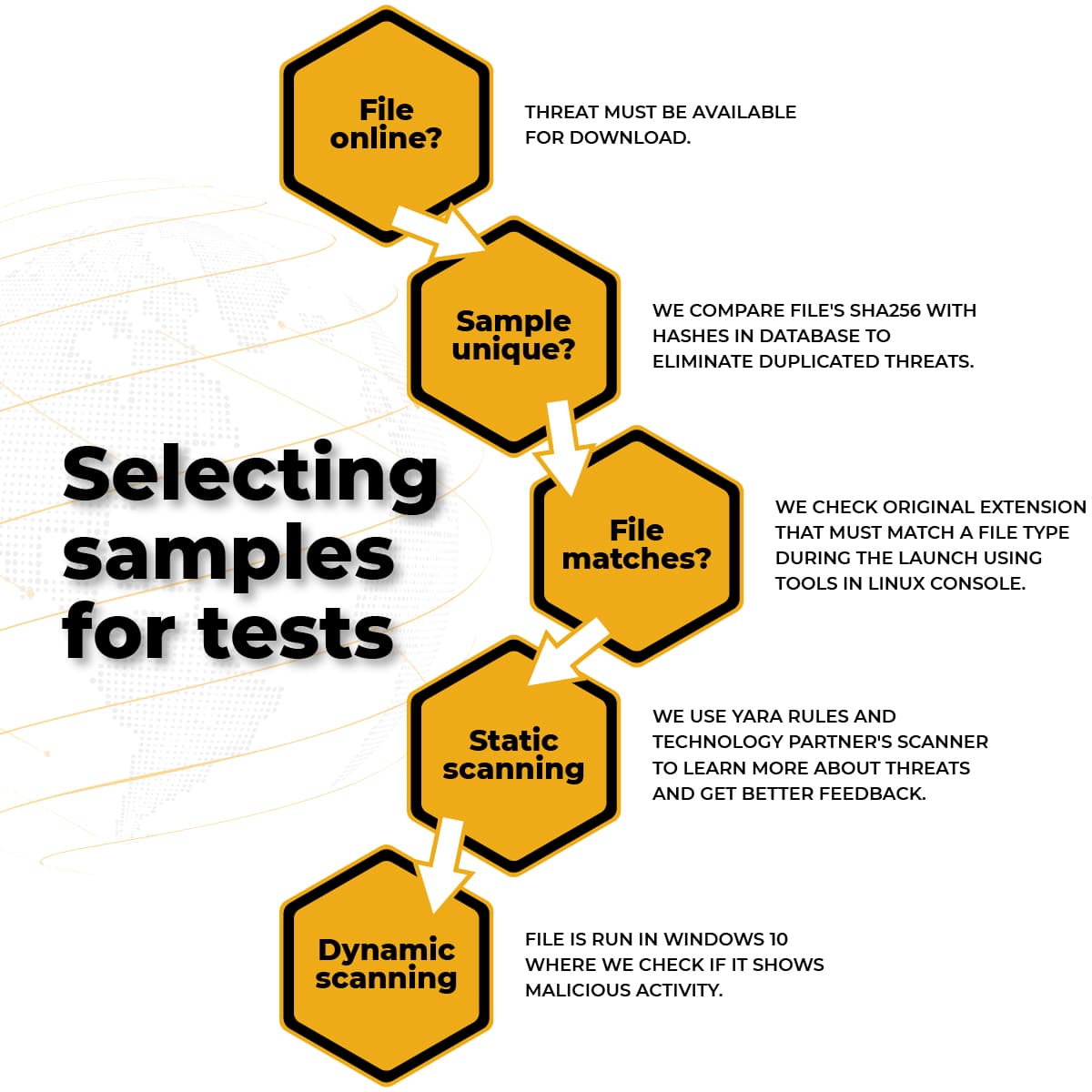

During the November test, we used a random selection of unique malware samples from the Internet. Only so-called “unique samples” are included in the test: each potentially malicious file is pre-checked against a number of parameters; for example, we compare its SHA256 checksum with the checksums already stored in a database. As a result, no threat has been used twice since the beginning of the Advanced In The Wild Malware Test series.
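The checksum-based uniqueness check can be sketched in Python with the standard `hashlib` module. In this sketch an in-memory set stands in for the database mentioned above, and the class and method names are hypothetical; files are hashed in chunks so that large samples do not have to fit in memory.

```python
import hashlib

def sha256_of(data: bytes) -> str:
    """SHA256 hex digest of an in-memory sample."""
    return hashlib.sha256(data).hexdigest()

def sha256_file(path: str, chunk_size: int = 1 << 20) -> str:
    """SHA256 hex digest of a file, read in 1 MiB chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

class SampleRegistry:
    """Hypothetical registry of checksums already used in the test series.

    A set replaces the real database purely for illustration.
    """

    def __init__(self):
        self._seen = set()

    def admit(self, digest: str) -> bool:
        """Record the checksum and return True only if it was not seen before."""
        if digest in self._seen:
            return False
        self._seen.add(digest)
        return True

registry = SampleRegistry()
print(registry.admit(sha256_of(b"sample-1")))  # True: new sample
print(registry.admit(sha256_of(b"sample-1")))  # False: duplicate rejected
```

Note that a checksum only catches byte-identical files; this is why the report mentions it as one of several pre-check parameters.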

Here are the 5 steps that each file must go through before it is included in the test:

Many of the threats included in the test were distributed over the seemingly secure HTTPS protocol. Creators of malicious websites can quickly obtain an SSL/TLS certificate free of charge to increase trust in a domain. Additionally, some of the files were hosted on legitimate but compromised web servers; here, attackers exploit the reputation of the domain from which a file is downloaded to trick basic browser-level security mechanisms.

Statistics from the test

Current telemetry data shows that the average response time to a threat across all developers combined was 104 seconds. F-Secure (5.9 seconds on average), Eset (7.8 seconds), and Avast (12.5 seconds) were the fastest at blocking and removing threats.
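A per-product ranking like the one above can be produced by averaging each product's per-sample remediation times and sorting the results. The Python sketch below uses invented vendor names and times chosen only to illustrate the calculation; it is not the actual test telemetry, and it does not attempt to reproduce how the 104-second overall figure was aggregated.

```python
from statistics import mean

def rank_by_remediation(times_by_vendor):
    """Return (vendor, average seconds) pairs, fastest first.

    Vendors with no recorded times are skipped.
    """
    averages = {v: mean(ts) for v, ts in times_by_vendor.items() if ts}
    return sorted(averages.items(), key=lambda item: item[1])

# Hypothetical per-sample remediation times in seconds.
times = {
    "Vendor C": [10.0, 15.0],
    "Vendor A": [4.0, 6.0, 7.7],
    "Vendor B": [7.0, 8.6],
}
for vendor, avg in rank_by_remediation(times):
    print(f"{vendor}: {avg:.1f} s")
# Vendor A: 5.9 s
# Vendor B: 7.8 s
# Vendor C: 12.5 s
```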

Not all developers were able to detect and block every in-the-wild malware sample. In this edition, Emsisoft Business Security received one confirmed negative result. The telemetry data presented in the CSV table shows in detail how developers differ in the levels at which they detected and blocked individual malware samples.

In the “Advanced In The Wild Malware Test” series, we usually use the default settings of security products. Predefined settings are good, but they are not always the best for a given user or organization. Therefore, for full transparency, we list the settings we changed, either to improve protection or because a given developer required it.

We do not use PUP/PUA samples in the test, but in a home or small office environment it is always a good idea to activate PUP/PUA detection. In a business environment, likewise, it is important to take advantage of all the protection features the solution offers to protect systems and users.