The May edition of the Advanced In The Wild Malware Test has come to an end. It is another round of our long-term initiative to test solutions that protect Windows systems.

The threat landscape is dynamic, so we are not slowing down – since May, we have been using more sources of malware in the wild, improving our methodology and tools that are necessary for testing. Detailed changes are described in the Changelog.

Apart from the effectiveness of antivirus protection, we check the so-called Remediation Time, that is, the time needed to respond to a threat and resolve an incident.

We measure Remediation Time when there is an attempt to neutralize malware and recover from an attack. Thanks to this, we can more accurately indicate the differences between technologies used by developers.

Our tests comply with the guidelines of the Anti-Malware Testing Standards Organization. Details about the test are available at this website as well as in our methodology.

How do we evaluate the tested solutions in 3 steps?

In a nutshell, our Advanced In The Wild Malware Tests cover 3 major aspects that follow one another and come together:

1. Selecting samples for the test

We collect malware in the form of real URLs from the Internet on an ongoing basis. We use a wide spectrum of malware from various sources, including public feeds and custom honeypots. As a result, our tests cover the most up-to-date and diverse set of threats.

More precisely, we obtain samples from low- and high-interaction honeypots (Dionaea, SHIVA, HoneyDB) that emulate services such as SSH, HTTP, HTTPS, SMB, FTP, TFTP, a real Windows system, and an e-mail server. We also use public malware sources: MWDB by CERT Polska, MalwareBazaar by Abuse.ch, and Urlquery.net.

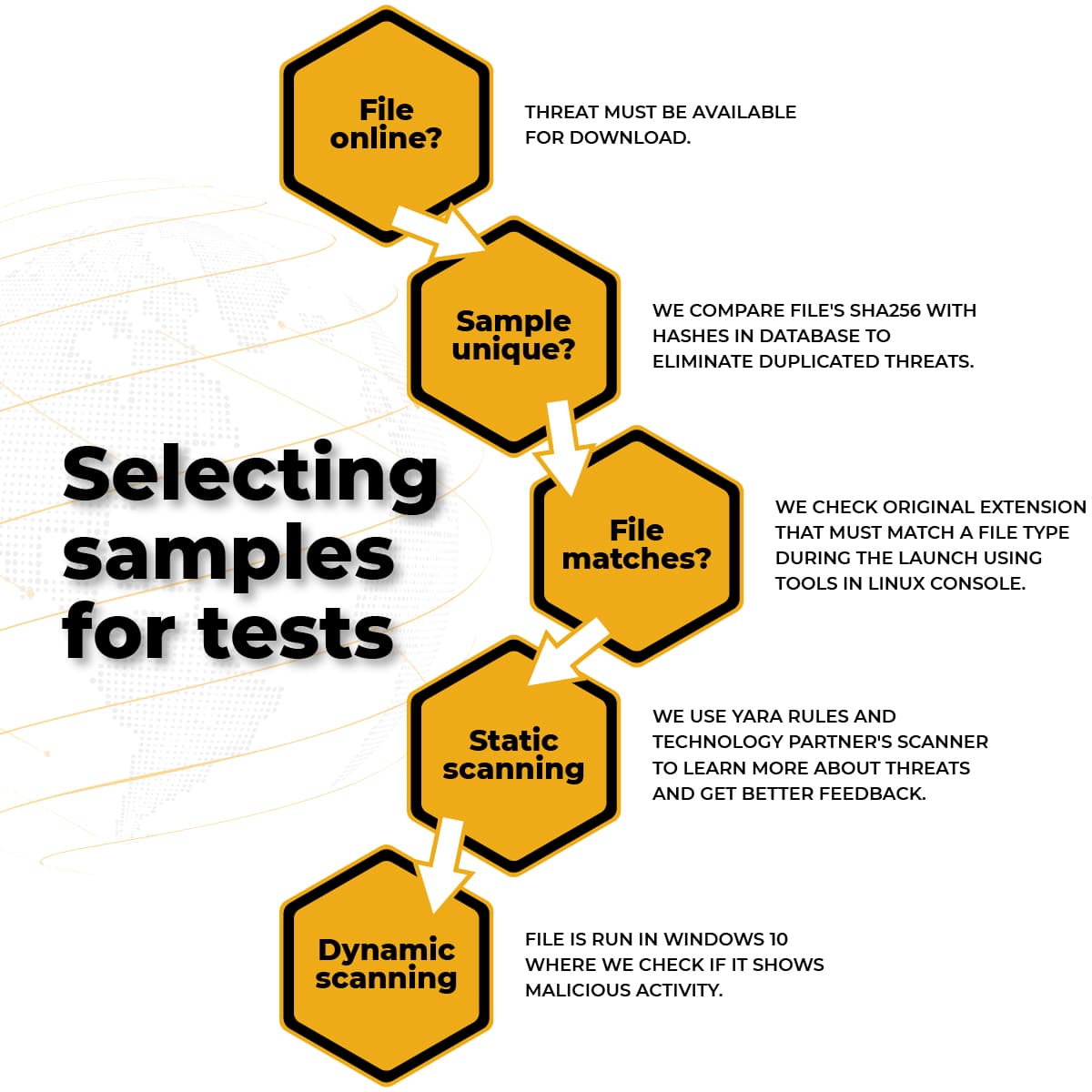

Before a malware sample URL is qualified for the test, it must be subjected to control scenarios that are described in the infographic below:

We evaluate the usefulness of each URL for the test based on the following parameters:

- Is a file online? The threat must be available online for download.

- Is a file unique? We compare the SHA-256 hash of the file to the hashes in our database to eliminate duplicate threats. Thanks to this, we never test two identical threats.

- Does a file fit the test? Using tools in the Linux console, we check the original file extension, which must match the file type when running in Windows.

- Static scanning. We use YARA rules and the technology partner’s scanner to learn more about a threat: to get better feedback about the file and its malware family, and to eliminate samples that do not match the Windows environment.

- Dynamic scanning. The file is run in Windows 10 where we check if it shows malicious activity.

If the file fails any of the checks above, it is rejected, and the next URL goes through the same checks.

In these 5 steps, we make sure that there are no duplicated samples in our test. This means that, for example, malware tested in January 2023 will never be qualified for the test database again. Thanks to this, our readers and developers can be sure that we are always testing unique samples.
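The offline part of these checks can be sketched roughly as follows. This is a minimal illustration and not our actual tooling: the function names and the in-memory `seen_hashes` set are hypothetical, and the file-type check is simplified to recognizing Windows PE files by their leading `MZ` bytes. Static and dynamic scanning are left out.

```python
import hashlib
from typing import Tuple

# Hypothetical in-memory stand-in for the database of already tested hashes.
seen_hashes = set()

def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the file contents as a hex string."""
    return hashlib.sha256(data).hexdigest()

def looks_like_windows_executable(data: bytes) -> bool:
    """Simplified type check: PE files (EXE, DLL) start with the bytes 'MZ'."""
    return data[:2] == b"MZ"

def qualify(data: bytes) -> Tuple[bool, str]:
    """Apply the checks in order and report the first one that fails."""
    if not data:
        return False, "file not available"
    digest = sha256_hex(data)
    if digest in seen_hashes:
        return False, "duplicate"
    if not looks_like_windows_executable(data):
        return False, "does not fit the test"
    # Static and dynamic scanning would follow here; both are outside this sketch.
    seen_hashes.add(digest)
    return True, "qualified"
```

Calling `qualify` twice with the same bytes rejects the second call as a duplicate, which mirrors the rule that a sample tested once never returns to the test database.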

2. Analyzing logs of malware samples and tested solutions

The samples analyzed in Windows are thoroughly verified against hundreds of rules that reflect the most common techniques used by malware creators. We monitor system processes, network connections, the Windows registry, and other changes made to the operating system and file system to learn whether malware was active during the analysis and what exactly it did.

In addition to monitoring changes made by malware, we track the activity of a protection solution along with its response to threats. We also measure the remediation time of the incident.

In conclusion, the Sysmon logs are used to determine the usefulness of a malware sample for the test as well as to check the response of the protection application to a given threat. Logs are necessary to quickly perform machine analysis of malware activity in Windows and of the reaction of the installed security software.
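To give an idea of what rule-based log analysis can look like, here is a small sketch. It is an assumption-laden illustration, not our rule set: the events are simplified dictionaries rather than full Sysmon records, and the three rules are invented for this example. The event IDs follow Sysmon conventions (1 for process creation, 3 for a network connection, 13 for a registry value set).

```python
# Simplified, hypothetical events: real Sysmon records carry many more fields.
events = [
    {"EventID": 1, "Image": r"C:\Users\test\Downloads\sample.exe"},
    {"EventID": 13,
     "TargetObject": r"HKU\S-1-5-21\Software\Microsoft\Windows\CurrentVersion\Run\updater"},
    {"EventID": 3, "Image": r"C:\Users\test\Downloads\sample.exe",
     "DestinationPort": 443},
]

# Illustrative rules only; the actual rules are not listed in this article.
RULES = {
    "process started from Downloads":
        lambda e: e["EventID"] == 1 and "\\Downloads\\" in e.get("Image", ""),
    "autorun registry value set":
        lambda e: e["EventID"] == 13
        and "\\CurrentVersion\\Run" in e.get("TargetObject", ""),
    "outbound network connection":
        lambda e: e["EventID"] == 3,
}

def match_rules(events):
    """Return the sorted names of all rules triggered by at least one event."""
    hits = set()
    for event in events:
        for name, predicate in RULES.items():
            if predicate(event):
                hits.add(name)
    return sorted(hits)

print(match_rules(events))
```

Keeping each rule as a named predicate makes it easy to add new techniques without touching the matching loop.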

3. Simulating a real-world scenario

In the test, we simulate a real scenario in which a threat enters the system through a URL opened in a browser. It can be a webpage prepared by a scammer or a link sent to a victim via a messenger and then opened in the system browser.

All products are tested under the same conditions using Mozilla Firefox. Thus, we are recreating a cyberattack scenario similar to the one you see in the real world, using the techniques used by actual attackers.

Results in May 2023

In May 2023, we used 416 fresh, unique malware samples from the Internet.

Tested solutions for home and small office installation:

- Avast Free Antivirus

- Bitdefender Antivirus Free

- G Data Total Security

- Kaspersky Plus

- Malwarebytes Premium

- Microsoft Defender

- Quick Heal Total Security

- Webroot Antivirus

- Xcitium Internet Security

Solutions for business and governmental institutions:

- Emsisoft Business Security

- Malwarebytes Endpoint Protection

- Xcitium ZeroThreat Advanced

Developers who wish to cooperate with us are welcome to contact us via AMTSO or directly through our contact webpage.

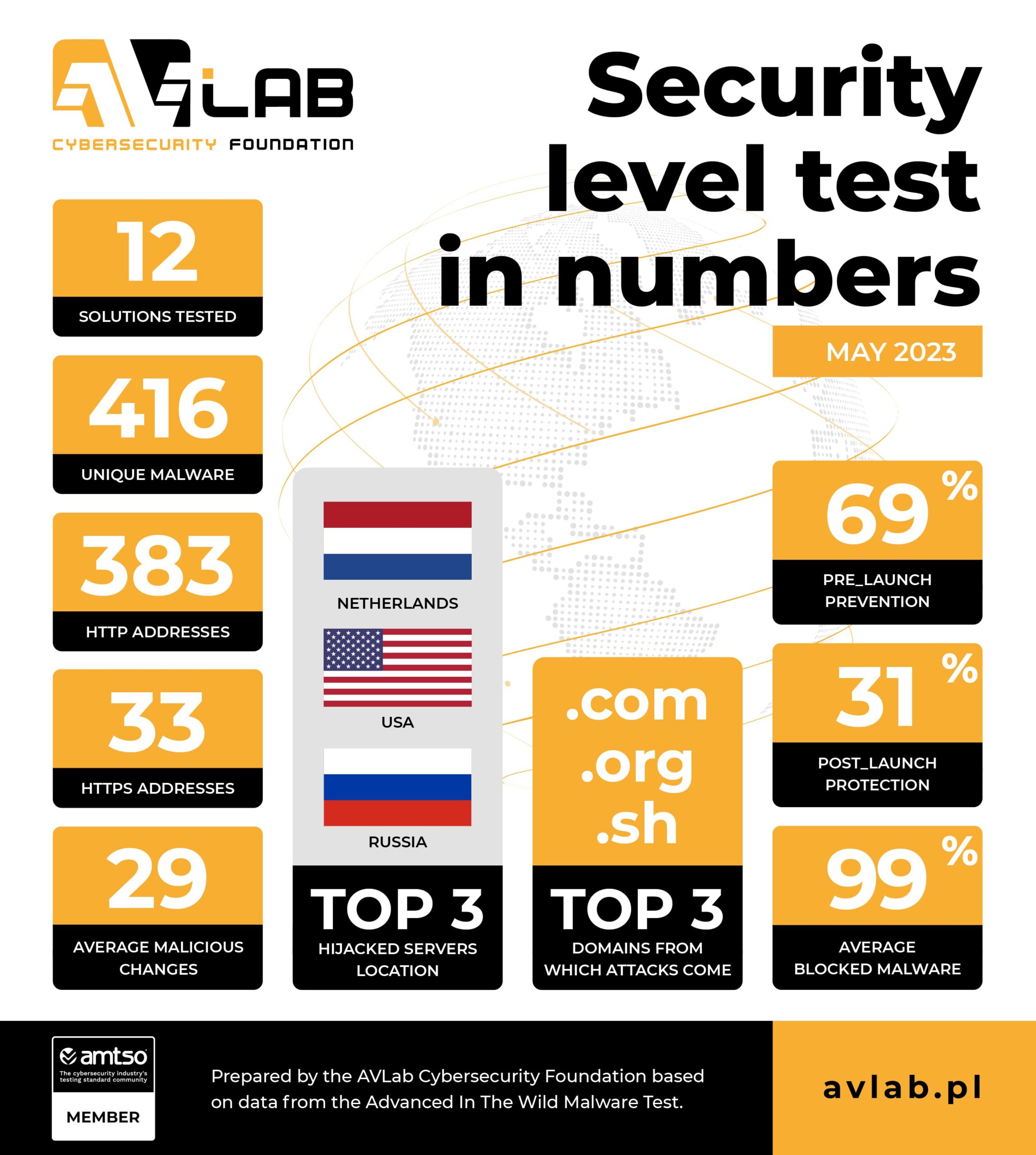

Protection test in numbers

We have prepared the following summary based on the telemetry data from the test in May 2023:

- 12 protection solutions took part in the test.

- We used 416 unique URLs with malware in total.

- Websites encrypted with HTTPS (in theory – safe) contained 33 malware samples.

- 383 malware samples were hosted with HTTP.

- During the analysis, each malware sample took on average 29 potentially harmful actions in Windows 10.

- Most malware originated from servers located in the Netherlands, the US, and Russia.

- The top-level domains most often used to host malware were .com, .org, and .sh.

- The average detection of URLs or malicious files before launch (the PRE-Launch level) is 69%.

- The average detection of malware after launch (the POST-Launch level) is 31%.

- The average result of blocking malware by all developers is 99%.

The HTTPS protocol can be used to spread malware. Remember that the SSL certificate and the so-called padlock at the web address do not certify the security of the website!
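The protocol split can be checked with simple arithmetic on the figures listed above. The short sketch below only reuses the published counts (416, 33, 383); the variable names are ours.

```python
total_samples = 416
https_samples = 33
http_samples = 383

# The two protocol groups should add up to the full sample set.
assert https_samples + http_samples == total_samples

https_share = round(100 * https_samples / total_samples, 1)
http_share = round(100 * http_samples / total_samples, 1)
print(f"HTTPS: {https_share}%  HTTP: {http_share}%")  # HTTPS: 7.9%  HTTP: 92.1%
```

In other words, roughly one in thirteen malicious URLs in this edition was served over an encrypted connection.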

Methodology

We continuously develop the detailed methodology to keep up with new trends in the cybersecurity and system protection industry. The links below lead to its individual parts:

- How do we acquire malware for testing, and how do we classify it?

- How do we collect logs from Windows and protection software?

- How do we automate our tests?

Because we are a member of the AMTSO Group, you can be sure that our testing tools and processes comply with international guidelines and are respected by developers.

Test results

Key technical data and results are available on the RECENT RESULTS page, along with a threat landscape prepared on the basis of telemetry data from the test in May 2023.

In addition, from now on, we will systematically publish dedicated developer pages that contain more detailed data from the test.

Here are some of them:

The list will be regularly updated.

Conclusions of the test

Note that the so-called Remediation Time is an individually measured value, expressed in seconds, that indicates the time of reaction to a malware sample. In our tests, we need to configure a product so that it automatically makes the best possible decisions, usually by moving threats to quarantine or blocking the connection through the firewall, along with saving logs.

In larger organizations employing security specialists, the Remediation Time can be significantly greater due to human decision-making.

In theory, the less time that elapses between the intrusion of malware into the system and the moment it is noticed and the incident is resolved, the better.
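As a rough illustration of how such a value can be derived from two logged moments, consider the sketch below. The function name, the ISO 8601 timestamp format, and the sample timestamps are assumptions made for this example; they are not measurements from the test or a description of our tooling.

```python
from datetime import datetime

def remediation_time_seconds(intrusion: str, resolved: str) -> float:
    """Seconds between malware intrusion and incident resolution.

    Both arguments are ISO 8601 timestamps, e.g. '2023-05-10T12:00:00'.
    """
    start = datetime.fromisoformat(intrusion)
    end = datetime.fromisoformat(resolved)
    if end < start:
        raise ValueError("resolution cannot precede intrusion")
    return (end - start).total_seconds()

# Made-up timestamps for illustration only.
print(remediation_time_seconds("2023-05-10T12:00:00",
                               "2023-05-10T12:00:42.500"))  # 42.5
```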

From the end user’s point of view, the most important thing is to detect suspicious activity. However, from an administrator’s perspective, the most important thing is to log the threat and attack telemetry data in the product console to quickly respond to a security incident (automatically or manually).